The August 2014 meeting of the Houston Recreational Computer Programming Group was held this past Sunday, on the 10th. Pretty good attendance this time, as has now become rather usual. Last month, I failed to provide a writeup for the July meeting, so that is included here too, below.

This month, Stephen Cameron demonstrated the work he and Jeremy Van Grinsven (mostly Jeremy) have done in Space Nerds In Space on OpenGL rendering of planetary rings, the shadows cast by those rings on planets, and the shadows cast by planets onto their rings. To summarize, a 1-dimensional texture is used to define a cross section of the ring. In the fragment shader for the rings, rays are cast back towards the light source and tested for intersection with the sphere of the planet to determine whether a given part of the ring is in shadow. In the fragment shader for the planets, rays are cast towards the light source and tested for intersection with the rings. Where a ray hits the rings, the point of intersection determines where to sample the ring texture, and the alpha (transparency) channel of that sample determines how much to shade the surface of the planet at that location.
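The two shadow tests described above can be sketched on the CPU in a few lines of Python. This is only an illustration of the geometry, not the actual Space Nerds In Space shader code: the function names, the nearest-sample texture lookup, and the representation of the ring texture as a plain list of alpha values are all assumptions made for the example.

```python
import math

def dot(u, v):
    return sum(a * b for a, b in zip(u, v))

def ray_hits_sphere(origin, direction, center, radius):
    """True if the ray origin + t*direction (t > 0) hits the sphere.
    direction must be unit length; origin is assumed to lie outside the sphere."""
    oc = [o - c for o, c in zip(origin, center)]
    b = dot(direction, oc)
    c = dot(oc, oc) - radius * radius
    disc = b * b - c                      # discriminant of t^2 + 2bt + c = 0
    if disc < 0.0:
        return False                      # the ray's line misses the sphere
    return -b + math.sqrt(disc) > 0.0     # the hit must be in front of the origin

def ring_point_in_shadow(point, to_light, planet_center, planet_radius):
    """Ring side: cast a ray from the ring point back towards the light."""
    return ray_hits_sphere(point, to_light, planet_center, planet_radius)

def planet_light_factor(point, to_light, planet_center, ring_normal,
                        inner_radius, outer_radius, ring_alpha):
    """Planet side: 1.0 means fully lit, 0.0 means fully shaded by the ring.
    ring_alpha is a hypothetical stand-in for the 1-D ring texture."""
    denom = dot(to_light, ring_normal)
    if abs(denom) < 1e-9:
        return 1.0                        # light is parallel to the ring plane
    to_center = [c - p for c, p in zip(planet_center, point)]
    t = dot(to_center, ring_normal) / denom
    if t <= 0.0:
        return 1.0                        # ring plane is behind the ray
    hit = [p + t * d for p, d in zip(point, to_light)]
    offset = [h - c for h, c in zip(hit, planet_center)]
    r = math.sqrt(dot(offset, offset))    # distance from planet center
    if r < inner_radius or r > outer_radius:
        return 1.0                        # passes inside or outside the ring
    u = (r - inner_radius) / (outer_radius - inner_radius)
    index = min(int(u * len(ring_alpha)), len(ring_alpha) - 1)
    return 1.0 - ring_alpha[index]        # more opaque ring, darker surface
```

In a real fragment shader the texture lookup would presumably be a filtered sample rather than a nearest-element list index, but the ray tests have the same shape.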

Here are some slides from a presentation about how planetary rings and associated shadows are rendered in Space Nerds In Space that give a bit more detail (use arrow keys to navigate, press F11 for full screen).

Chris Cauley presented a parameterized OpenSCAD script for a kind of 3D printable fabric, coupled with a customized OpenJSCAD implementation with a custom widget for specifying the layout of the fabric. This can be viewed on his fork of OpenJSCAD, where you can change the dimensions and even change the shape of the fabric.

I am very late in writing up the July 2014 meeting. Let me rectify that now. Here's what went on at the July meeting.

Stephen Cameron showed a reaction-diffusion simulation written in Processing and based on a reaction-diffusion tutorial by Karl Sims. It models a system of two virtual chemicals, A and B, reacting and diffusing on a 2-dimensional grid. A is added at a given rate. B is removed at a given rate (as if evaporating). Two instances of B combine with one of A to produce three instances of B, as if B uses A as food to reproduce. Both chemicals diffuse through the grid, but A diffuses faster than B.

For each grid cell in the simulation, two numbers are tracked, representing the concentrations of the two chemicals at that location. These concentrations are updated according to the Gray-Scott model:

New values, A' and B', are computed as follows:

A' = A + (Da * convolution(A) - A*B*B + f * (1 - A)) * DeltaT

B' = B + (Db * convolution(B) + A*B*B - (k + f) * B) * DeltaT

Da and Db are the diffusion rates of A and B, and are 1.0 and 0.5, respectively.

f is the feed rate of A, and is 0.055.

k is the kill rate of B, and is 0.062.

DeltaT is 1.0.

The convolution matrix used is a 3x3 matrix:

0.05 0.2 0.05

0.2 -1.0 0.2

0.05 0.2 0.05

The grid is initialized with A = 1.0 and B = 0.0, and then some small areas may be seeded with B = 1.0.

The resulting pattern is very sensitive to the feed and kill rates.
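The update rule, parameters, and convolution matrix given above can be put together in a small pure-Python sketch. This is not the Processing program that was shown, just a minimal illustration of one time step. The function names are made up for the example, and wrapping around at the grid edges is an assumption, since the writeup does not say how edges are handled.

```python
# Parameter values quoted in the writeup.
DA, DB = 1.0, 0.5          # diffusion rates of A and B
FEED, KILL = 0.055, 0.062  # feed rate of A, kill rate of B
DELTA_T = 1.0

KERNEL = [[0.05, 0.2, 0.05],
          [0.2, -1.0, 0.2],
          [0.05, 0.2, 0.05]]

def make_grid(width, height, seeds=()):
    """A = 1.0 and B = 0.0 everywhere, with B = 1.0 at each (x, y) in seeds."""
    a = [[1.0] * width for _ in range(height)]
    b = [[0.0] * width for _ in range(height)]
    for x, y in seeds:
        b[y][x] = 1.0
    return a, b

def convolve(grid, x, y):
    """Apply the 3x3 kernel at (x, y). Assumption: edges wrap around."""
    height, width = len(grid), len(grid[0])
    total = 0.0
    for dy in (-1, 0, 1):
        for dx in (-1, 0, 1):
            neighbor = grid[(y + dy) % height][(x + dx) % width]
            total += KERNEL[dy + 1][dx + 1] * neighbor
    return total

def step(a, b):
    """Compute A' and B' for every cell from the current A and B."""
    height, width = len(a), len(a[0])
    new_a = [[0.0] * width for _ in range(height)]
    new_b = [[0.0] * width for _ in range(height)]
    for y in range(height):
        for x in range(width):
            av, bv = a[y][x], b[y][x]
            reaction = av * bv * bv
            new_a[y][x] = av + (DA * convolve(a, x, y) - reaction
                                + FEED * (1.0 - av)) * DELTA_T
            new_b[y][x] = bv + (DB * convolve(b, x, y) + reaction
                                - (KILL + FEED) * bv) * DELTA_T
    return new_a, new_b
```

Calling `step` repeatedly on the grids returned by `make_grid(width, height, seeds=[...])` advances the simulation. Note that the kernel weights sum to zero, so a grid with no B seeded anywhere simply stays at A = 1.0, B = 0.0.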

It so happened that Chris Cauley did some work in this area in grad school, and so he explained a bit about how the values of the convolution matrix were arrived at and why this was equivalent to some other means of solving the differential equations, but the details of this explanation now escape me.

Above, some output of the reaction diffusion program.

Links:

- Reaction Diffusion tutorial by Karl Sims.

- Robert Munafo's web site: Reaction-Diffusion by the Gray-Scott Model: Pearson's Parameterization

- A program that uses a reaction-diffusion algorithm to produce images by CNC routing: Reactor.

- Ready: "... a program for exploring continuous and discrete cellular automata, including reaction-diffusion systems, on grids and arbitrary meshes."

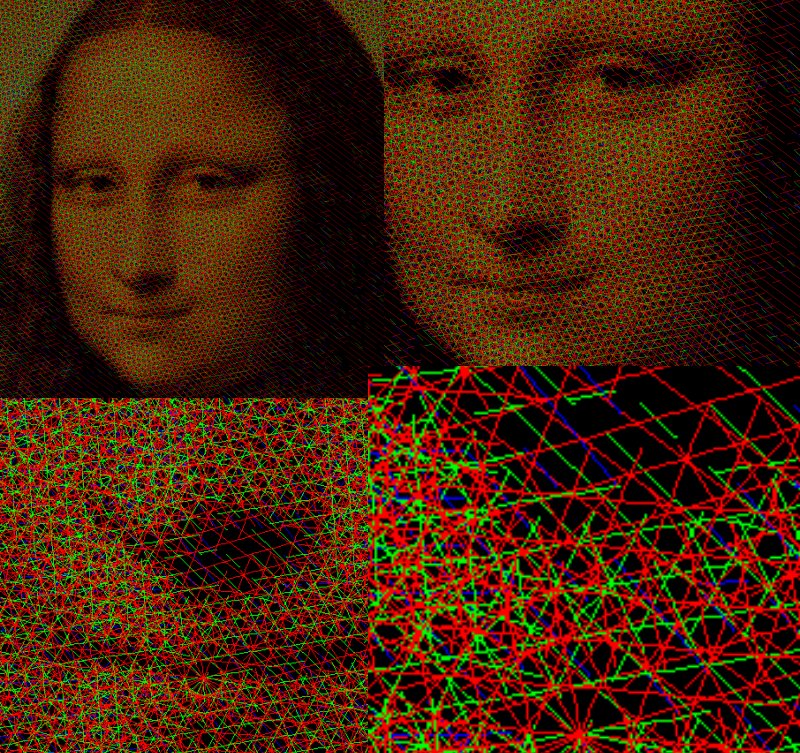

Stephen Cameron also showed an enhancement to his crosshatcher program to produce RGB images in addition to black and white images.

The algorithm works by producing several layers of parallel lines over the source image, each layer rotated to a different angle. Each line is drawn as a series of very short line segments. For each line segment, the underlying source image is sampled, and if the corresponding location on the source image is "dark enough", the line segment is drawn; otherwise, it is omitted. For each successive layer of parallel lines, the "dark enough" threshold is raised. This seems to be enough to produce a reasonably good looking crosshatched image. To extend this to work with RGB, three sets of layers are computed, one for each color: red, green and blue. The sets of layers for the three colors are significantly rotated relative to one another. The "dark enough" criterion is confined to a single channel (red, green or blue), but otherwise is identical to the black and white case. Some sample output:

Above, the same image at several magnifications.
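For the curious, the crosshatching approach described above might look something like the following in Python. This is a rough sketch and not the actual crosshatcher program: the function names, the layer angles, the thresholds, the line spacing, and the 30 degree rotation between color channels are all invented for illustration. It also assumes that "dark enough" means `1.0 - brightness >= threshold`, with brightness in the range 0.0 to 1.0, and it returns line segments rather than drawing them.

```python
import math

def hatch_layer(image, angle_deg, threshold, spacing=4.0, seg_len=1.0):
    """One layer of parallel lines at angle_deg, as a list of short segments.
    image is a 2-D list of brightness values, 0.0 (black) to 1.0 (white)."""
    height, width = len(image), len(image[0])
    theta = math.radians(angle_deg)
    dx, dy = math.cos(theta), math.sin(theta)   # direction along each line
    nx, ny = -dy, dx                            # direction between lines
    cx, cy = width / 2.0, height / 2.0
    reach = math.hypot(width, height) / 2.0     # covers the image at any angle
    segments = []
    offset = -reach
    while offset <= reach:
        t = -reach
        while t < reach:
            x0 = cx + offset * nx + t * dx
            y0 = cy + offset * ny + t * dy
            x1, y1 = x0 + seg_len * dx, y0 + seg_len * dy
            # Sample the source image at the midpoint of the segment.
            px = math.floor((x0 + x1) / 2.0)
            py = math.floor((y0 + y1) / 2.0)
            if 0 <= px < width and 0 <= py < height:
                if 1.0 - image[py][px] >= threshold:   # "dark enough"
                    segments.append(((x0, y0), (x1, y1)))
            t += seg_len
        offset += spacing
    return segments

def crosshatch(image, angles=(0.0, 45.0, 90.0, 135.0),
               thresholds=(0.2, 0.4, 0.6, 0.8)):
    """Stack several layers; each successive layer has a higher threshold."""
    segments = []
    for angle, threshold in zip(angles, thresholds):
        segments.extend(hatch_layer(image, angle, threshold))
    return segments

def crosshatch_rgb(rgb_image, channel_rotation=30.0):
    """Run the same algorithm once per channel, each set of layers rotated.
    rgb_image is a 2-D list of (r, g, b) tuples with components in 0.0..1.0."""
    result = {}
    for index, name in enumerate(("red", "green", "blue")):
        channel = [[pixel[index] for pixel in row] for row in rgb_image]
        angles = tuple(a + index * channel_rotation
                       for a in (0.0, 45.0, 90.0, 135.0))
        result[name] = crosshatch(channel, angles=angles)
    return result
```

With these made-up thresholds, a mid-gray area ends up covered by two of the four layers, while a black area gets all four, which is what produces the impression of shading.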

Ru presented some work he did, in the course of penetration testing some device firmware, to add support for a rather esoteric microprocessor (exactly which one I forget) to the IDA debugging and decompiling software. I'm afraid I cannot provide more details about this presentation. Guess you had to be there.

Chris Cauley presented some work he did with a JavaScript implementation of OpenSCAD to produce some 3D printed parametric flexible snakes. More info about the 3D parametric flexible snakes may be found here.